A respite from algorithmic violence: Memes, platforms and content moderation

Idil Galip

Meme contributions by @ada.wrong, @bundaskanzlerin, @saqmemes

Artificial intelligence (AI) is used for a variety of purposes in modern societies. One example of AI is machine learning, where machines are trained to make judgments about objects, people, places and other variables and materialities as humans do. Computers are fed large quantities of data from databases or repositories and asked to identify patterns. As a result of machine learning, many jobs that humans do can be automated.

These jobs can range from content moderation on social media platforms to the screening of job applications, to deploying police surveillance, and facilitating digital payments. These systems can almost be thought of as children who learn how to be in the world as social beings, through their environments and the interactions they have with the people who inhabit those environments. But ultimately, children are impressionable, and so are machines. When fed masses of data from the chaotic and serpentine pits of the world wide web, they can often become a reflection of the turbulent world that they feed on.

In 2017, Aylin Çalışkan, a computer scientist at the University of Washington, and her colleagues published an article in which they showed that “standard machine learning can acquire stereotyped biases from textual data that reflect everyday human culture”. They demonstrated that a machine which was teaching itself the English language absorbed biases present in everyday cultures and started associating “unpleasantness” with Black American names. In a 2021 report about AI and bias, Çalışkan highlighted that the biased decisions of natural language processing (NLP), a branch of computer science concerned with teaching computers to understand and reproduce human language and speech, “not only perpetuates historical biases and injustices, but potentially amplif[ies] existing biases at an unprecedented scale and speed”. These amplified biases have dire consequences for already marginalised people who have been subject to historical injustices. Some of these biased decisions have serious consequences for people’s safety, health, and welfare. Others might have less grievous yet piecemeal, everyday effects, which can result in the loss of livelihoods and income for people who create online content on social media platforms.
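The kind of association reported in the 2017 study can be illustrated with a toy calculation. The sketch below is not the authors' code: it assumes that words are represented as numeric vectors (word embeddings) and scores each name by how much closer it sits to “pleasant” words than to “unpleasant” ones. Every vector and every name here (`pleasant`, `unpleasant`, `name_group_a`, `name_group_b`) is invented for illustration; real embeddings are learned from large text corpora and have hundreds of dimensions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(word_vec, pleasant, unpleasant):
    """Mean similarity to pleasant words minus mean similarity to unpleasant words."""
    mean_pleasant = sum(cosine(word_vec, p) for p in pleasant) / len(pleasant)
    mean_unpleasant = sum(cosine(word_vec, q) for q in unpleasant) / len(unpleasant)
    return mean_pleasant - mean_unpleasant


def mean_association(names, pleasant, unpleasant):
    """Average association score over a group of name vectors."""
    return sum(association(n, pleasant, unpleasant) for n in names) / len(names)


# Hypothetical 2-D vectors, made up for this example only.
pleasant = [(1.0, 0.1), (0.9, 0.2)]
unpleasant = [(0.1, 1.0), (0.2, 0.9)]
name_group_a = [(0.8, 0.3), (0.9, 0.1)]  # placed near the "pleasant" words
name_group_b = [(0.3, 0.8), (0.2, 0.9)]  # placed near the "unpleasant" words

score_a = mean_association(name_group_a, pleasant, unpleasant)
score_b = mean_association(name_group_b, pleasant, unpleasant)
print(f"group A: {score_a:+.3f}, group B: {score_b:+.3f}")
```

A positive score means a group of names sits closer to the pleasant words, a negative score closer to the unpleasant ones. In a trained system nobody places the vectors by hand: their positions come from the text the machine was fed, which is how everyday stereotypes can end up encoded in them.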

A combination of machine and human policing is used on platforms such as Instagram, Facebook, Twitter and YouTube to moderate content. The exact details of how content is moderated on these platforms are largely kept vague and opaque, and creators feel like they are kept in the dark about how the “algorithm” operates. “The algorithm”, which users and creators believe is in charge of who gets to be visible or suppressed on the platform, casts an almost mythical and towering shadow over certain spaces, such as Instagram. Often perceived as an all-knowing, omniscient yet unjust power, the algorithm gives and takes according to its will. For creators who depend on their online visibility for part or the entirety of their income, this is akin to having a workplace where the furniture is rearranged every day, colleagues are fired and re-hired every week, the marker for “good work” is always unclear and the boss gaslights its workers in unpredictably bizarre ways.

In 2020, a social media campaign led by Nyome Nicholas-Williams called #IWantToSeeNyome prompted Instagram to change its rules around nudity. The campaign started after Instagram flagged and removed a semi-nude photograph of Nicholas-Williams, who is a black, plus-sized woman. After the removal, the social media platform also notified her that her account might be taken down for a community guidelines violation. Nicholas-Williams’ occupation as a model and influencer means that she depends on her online visibility, like many other content creators. The platform bias she has experienced, which is due to human and/or machine policing of content on Instagram, is mirrored in the experiences of users whose bodies and identities fall outside of what the platform deems acceptable. How “The Algorithm” decided to suppress Nicholas-Williams is unclear, yet it is a common occurrence among creators whose voices would have been suppressed in our pre-online worlds. Ysabel Gerrard, lecturer in digital media and society at the University of Sheffield, and Helen Thornham, associate professor in digital cultures at the University of Leeds, describe this particular way that human and machine moderators converge in perpetuating biases and “normative gender roles”, especially white femininities, as “sexist assemblages” (2020). This framework accounts not only for the seemingly non-human spectre of The Algorithm, but also for the human moderators, the platforms’ community guidelines and the users who engage in policing each other. Viewing algorithmic bias on platforms not as an inscrutable repercussion of machine learning but as a result of the intersection of a multiplicity of actors can aid us in addressing the moving parts of online inequalities with more confidence. The veil can only be lifted off the enigmatic algorithm by addressing the humans who maintain it.

Naya, @bundaskanzlerin on Instagram, is a comedian and multimedia storyteller who utilises her sharp wit to deliver nuanced parables of living as “the other” in German society. Her video meme here shows her reckoning with the fact that she has been shadow-banned and the realisation that she must now offer a sacrifice to the platform, in the form of her posts, to retrieve her platform visibility.

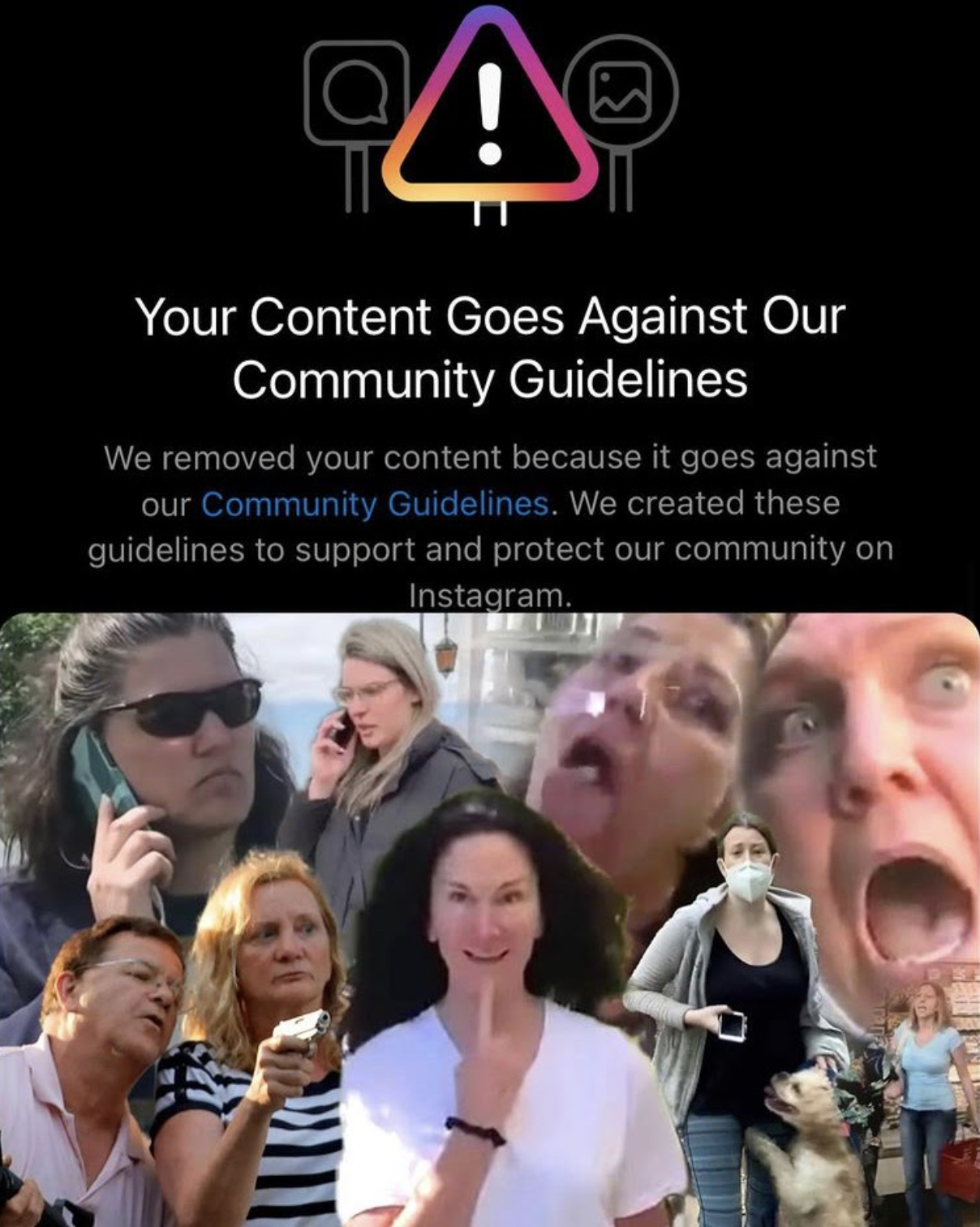

In the second image, Naya juxtaposes the community guidelines violation notification with the post that triggered the violation in question. Within this meme, the sexist assemblages of platform moderation (Gerrard and Thornham, 2020) are represented by a collage of “Karen”s: a name used to refer to entitled white women who unleash “the violent history of white womanhood” (Lang, 2020) and seek to police the existence and behaviour of minorities.

Following these discussions, we might wonder how we can further resist platform bias. For instance, Nicholas-Williams had people rallying behind her to get her photograph reinstated, and the campaign ultimately helped raise awareness of an issue that has been plaguing black and brown people online for years by putting it at the forefront of popular consciousness, even if only for a few months. Another similar initiative came from an unexpected direction in 2019, when a page titled @unionizedmemes emerged on Instagram. Calling for the “memers of the world” to unite, this group of meme makers were fed up with being shadow-banned and having their accounts deactivated. One of their posts, dated April 10, 2019, reads:

“The ig meme union is an effort to foster solidarity among memers. This is an attempt to break cycles of retaliation and selective censorship by Instagram. These actions on the part of ig potentially put the livelihoods and careers of meme lords at risk, while profiting off of the labor of said clout queens n kings”. — @unionizedmemes

The demands of this, now seemingly defunct, Memers’ Union were mainly the reinstatement of their censored members’ meme pages. After receiving coverage from various publications such as Vox and The Atlantic, several meme makers’ pages were reactivated by Instagram. One of those people was Omnia, a meme creator who now runs @saqmemes on Instagram. The memes below are her original work and all relate to the issues of algorithmic bias and governance on social media platforms like Instagram.

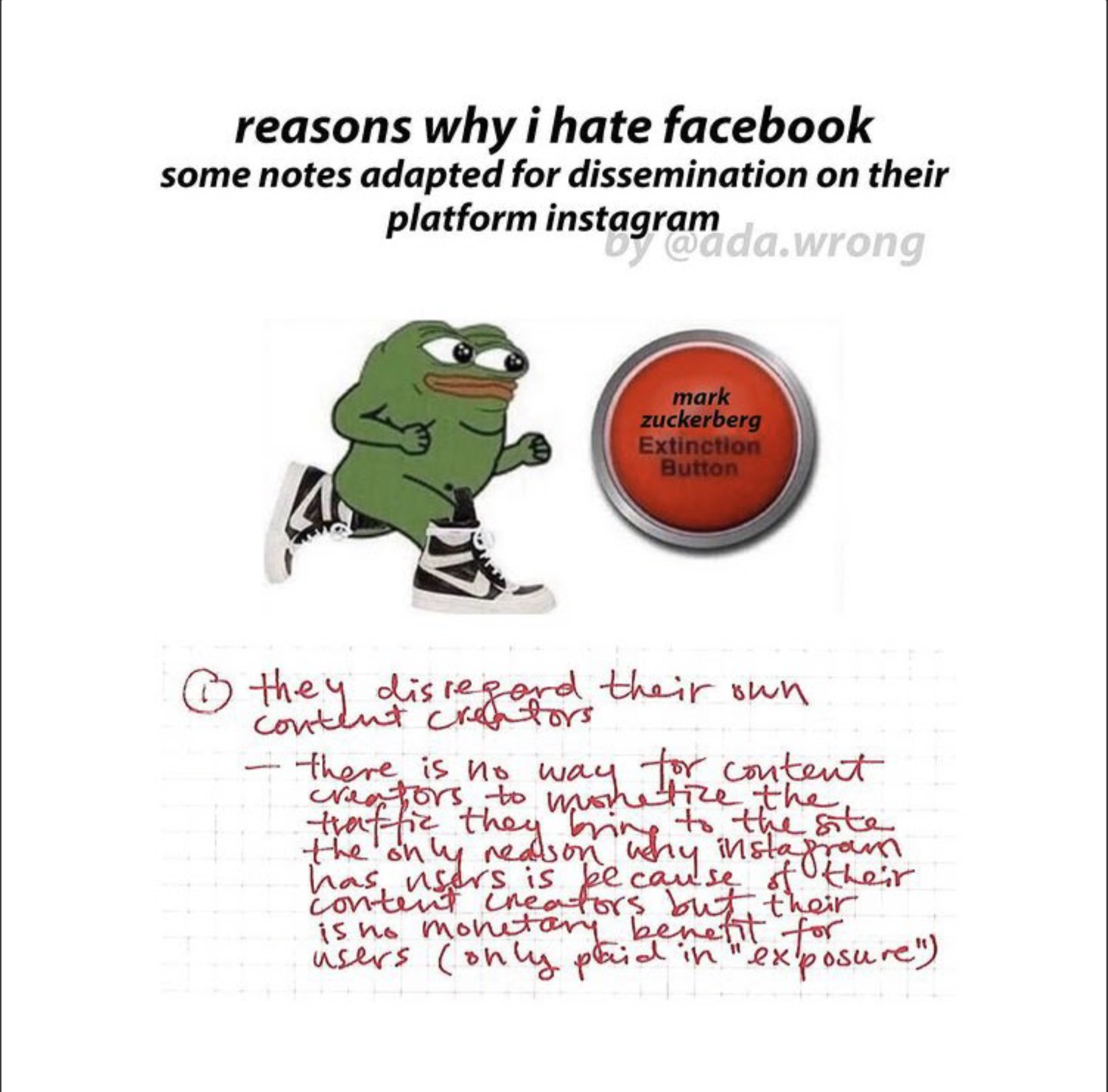

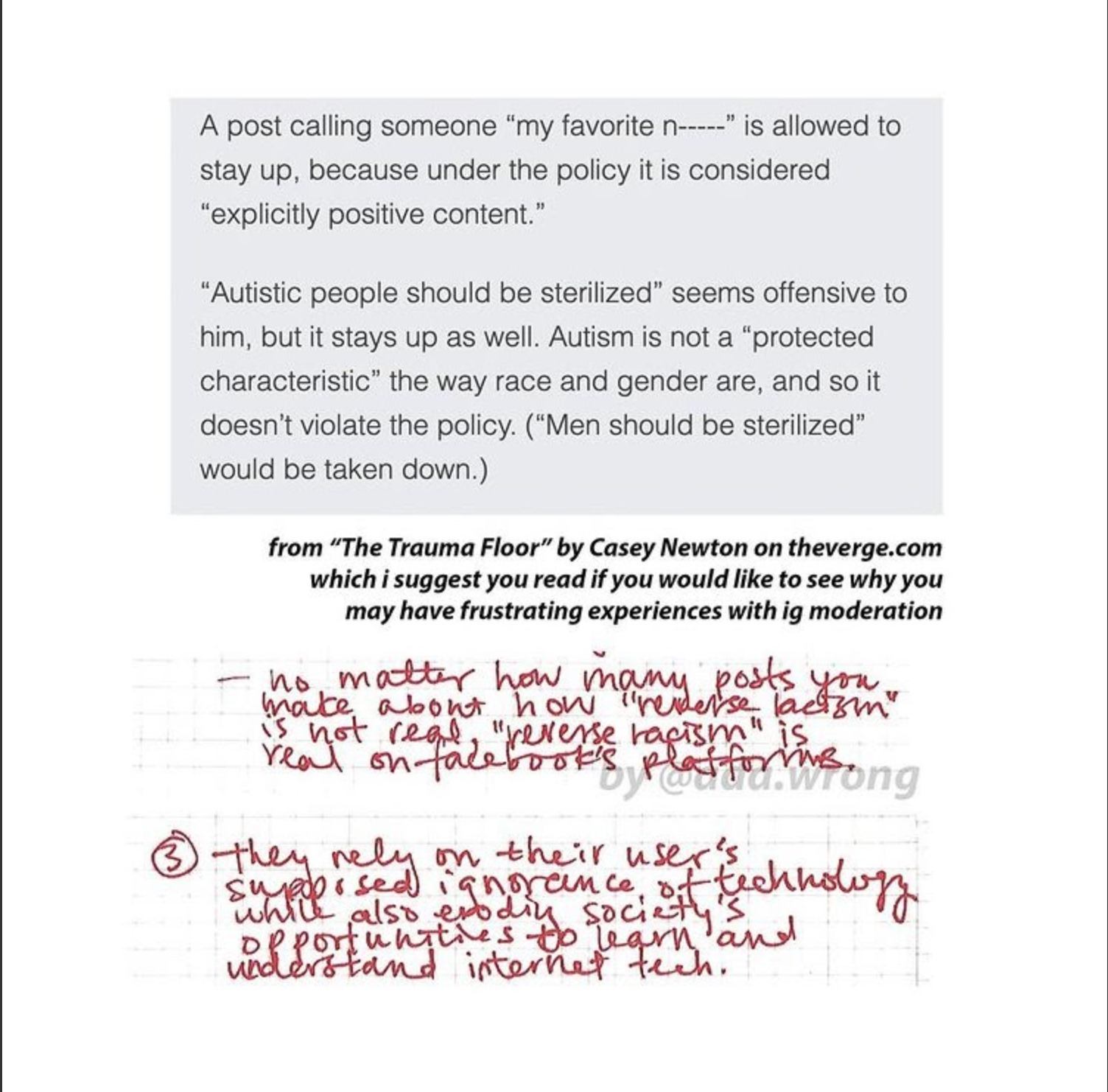

Similarly, Jillian, @ada.wrong on Instagram, shares her notes on what it means to be a content creator navigating the ever-changing community guidelines and moderation processes on Instagram in the slides below.

Resisting algorithmic bias online is much more difficult on platforms that have enormous user bases and consequently make frequent use of machine policing to moderate the vast numbers of users who utilise their services. In their 2020 study of algorithmic content moderation, researchers Robert Gorwa, Reuben Binns and Christian Katzenbach highlighted the need for increased regulation of algorithmic moderation systems, which they characterise as “unaccountable” and “poorly understood”. Raising awareness about algorithmic bias and demanding responsiveness and accountability from larger platforms is important; however, so is building alternative online spaces which extend beyond the jurisdiction of Facebook and its growing influence over the internet. While more time- and effort-intensive, constructing and maintaining smaller online spaces by and for our own communities can offer a respite from ongoing large platform governance and surveillance. Larger social media platforms govern their users in ways that replicate existing unequal systems, so how do we attempt to extract ourselves from these systems? Community initiatives seem to be a tangible and sustainable solution to our platform ennui.

One such example is virtualgoodsdealer, a multimedia platform run by Jillian of @ada.wrong, Omnia of @saqmemes and Cindie of @males_are_cancelled. As a curated and mindful alternative to visual media platforms, the virtualgoodsdealer website is full of digital installations, exhibitions, essays, goods, and artistic material. For instance, their first exhibition “Deleted in 2020” spoke directly to the online policing, algorithmic and human, of content posted on Instagram. The description of the exhibition reads “‘Deleted in 2020’ is a collection of images submitted by Instagram users. All the images in this exhibit were deleted off Instagram due to violations of community guidelines”. These images can still be viewed on their website today.

The proliferation of alternative spaces that centre the experiences of people whose bodies, words and existences are policed, moderated and deleted from the online as well as offline realm is a crucial part of dreaming beyond ai. The Algorithm not only surveils but decides who gets to be visible. Beyond its material powers, The Algorithm acts almost as a metaphysical presence in the lives of platform users, especially those who depend on platforms for visibility and income. Projects such as dreaming beyond ai can be utilised as a way to extract ourselves from the posting economy. While perhaps a complete withdrawal from the enormous hold of platforms such as Instagram is untenable or impossible, alternative online spaces must be championed and supported for a respite from algorithmic violence and harassment.

Meme Credits (in order of appearance)

Shadowbanned Video Meme, May 6, 2021

https://www.instagram.com/p/COiVfl8Hr7a/?utm_source=ig_web_copy_link

Community Guidelines Karen Meme, May 29, 2021

https://www.instagram.com/p/CPdmQwuH5bT/?utm_source=ig_web_copy_link

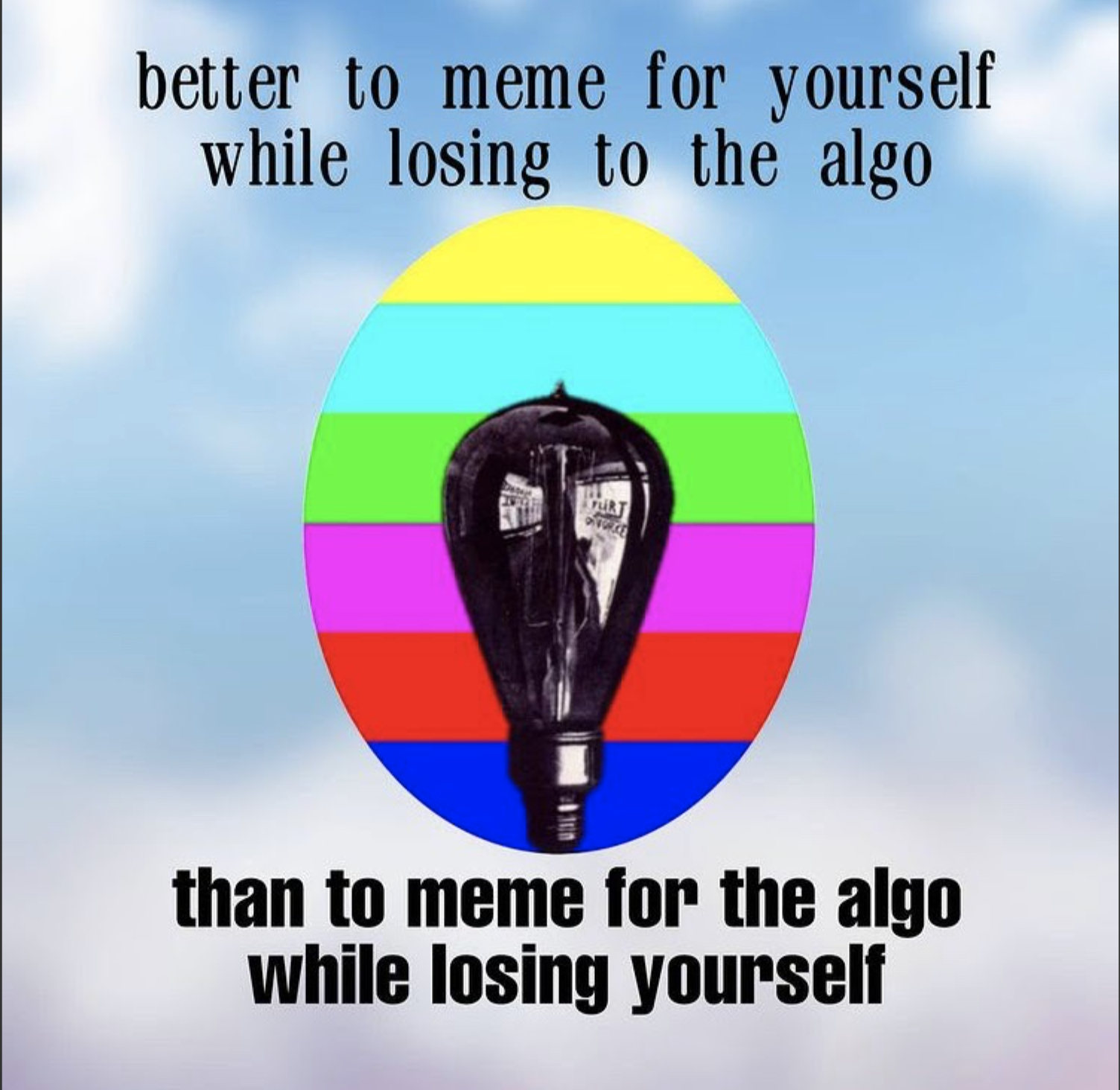

Losing to the Algo Meme, September 29, 2020

https://www.instagram.com/p/CFa87b5DKba/?utm_source=ig_web_copy_link

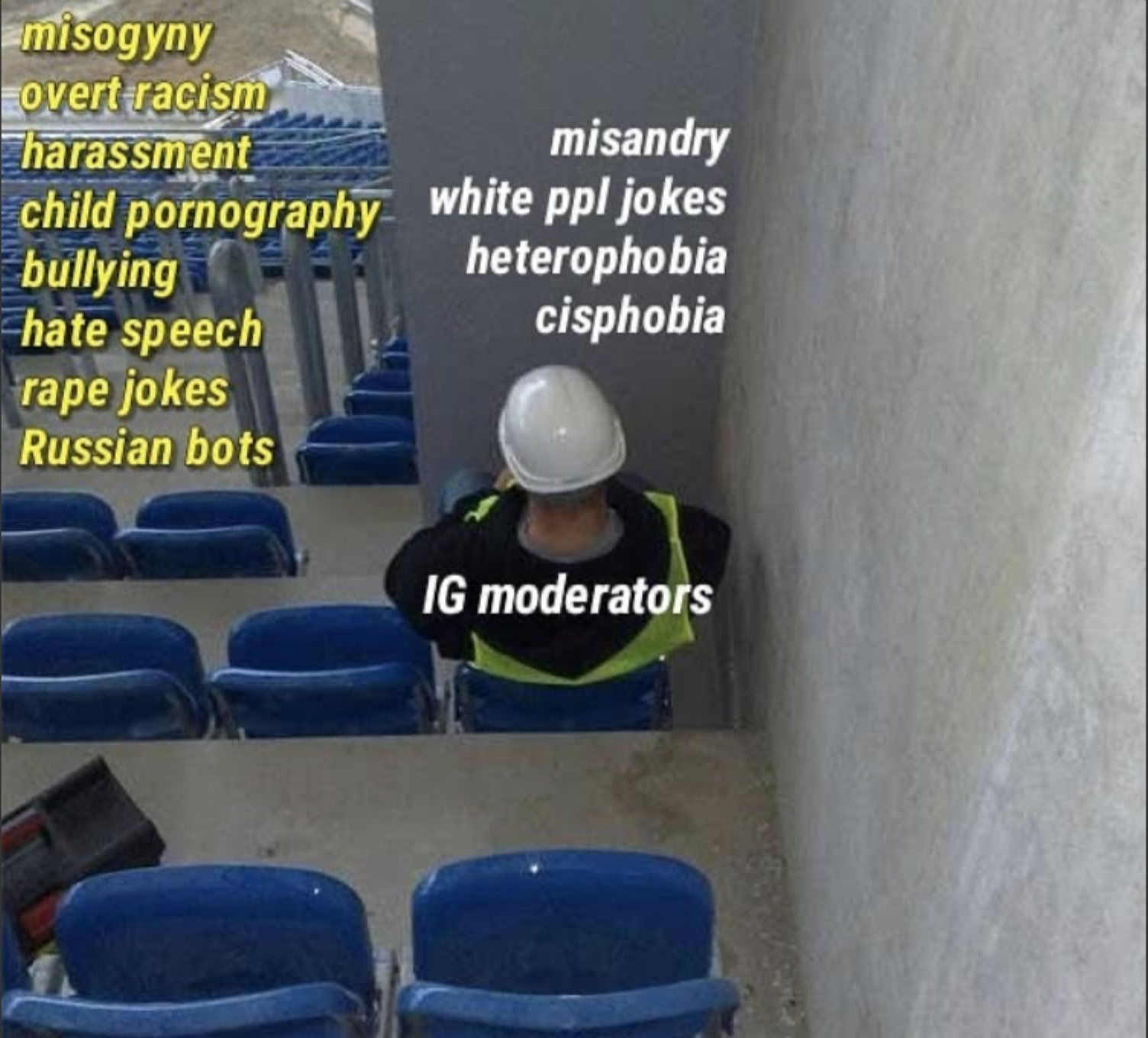

Instagram Moderators Meme, January 24, 2019

https://www.instagram.com/p/Bs_7t0WFX9f/?utm_source=ig_web_copy_link

Reasons why I hate Facebook slides, September 28, 2020

https://www.instagram.com/p/CFqS8tmg_OL/?utm_source=ig_web_copy_link

References

Çalışkan, A. (2021) ‘Detecting and mitigating bias in natural language processing’, The Brookings Institution, https://www.brookings.edu/research/detecting-and-mitigating-bias-in-natural-language-processing/

Çalışkan, A., Bryson, J. J. and Narayanan, A. (2017) ‘Semantics derived automatically from language corpora contain human-like biases’, Science, 356(6334), pp. 183–186.

Gerrard, Y. and Thornham, H. (2020) ‘Content moderation: Social media’s sexist assemblages’, New Media & Society, 22(7), pp. 1266–1286. doi: 10.1177/1461444820912540.

Gorwa, R., Binns, R. and Katzenbach, C. (2020) ‘Algorithmic content moderation: Technical and political challenges in the automation of platform governance’, Big Data & Society. doi: 10.1177/2053951719897945.

Heilweil, R. (2020) ‘Why algorithms can be racist and sexist’, Vox, https://www.vox.com/recode/2020/2/18/21121286/algorithms-bias-discrimination-facial-recognition-transparency

Lang, C. (2020) ‘How the ‘Karen Meme’ Confronts the Violent History of White Womanhood’, TIME, https://web.archive.org/web/20210111213845/https://time.com/5857023/karen-meme-history-meaning/

Peters, A. (2020) ‘Nyome Nicholas-Williams took on Instagram censorship and won’, Dazed Digital, https://www.dazeddigital.com/life-culture/article/50273/1/nyome-nicholas-williams-instagram-black-plus-size-censorship-nudity-review

Tonic, G. (2020) ‘How Three Women Forced Instagram to Change Its Nudity Policy’, VICE, https://www.vice.com/en/article/y3gz8m/instagram-nudity-policy-iwanttoseenyome